Lawyers for Elon Musk recently argued, during a deposition for a lawsuit related to the 2018 crash of a Tesla Model X that was using Autopilot, that a statement allegedly made by Musk in 2016 (“a Model S and Model X, at this point, can drive autonomously with greater safety than a person”) simply can’t be trusted as real because of the prevalence of deepfakes of popular personalities. This, despite the fact that the video in question was apparently recorded at a live conference with multiple witnesses, and that the event took place in 2016 (with the video available on YouTube ever since), well before AI was at the point where it could possibly have faked the video to the degree being claimed. Instead of answering the question directly, the lawyers stated:

While at first glance it might seem unusual that Tesla could not admit or deny the authenticity of video and audio recordings purportedly containing statements by Mr. Musk, the reality is he, like many public figures, is the subject of many 'deepfake' videos and audio recordings that purport to show him saying and doing things he never actually said or did.


They openly admit that they can neither confirm nor deny the claim, but they stop short of saying it’s not him, only that you can’t trust that it is him (the old “I don’t recall saying that”). Of course, I expect the story to change once someone is under oath on the witness stand, or witnesses to the event are marched into court, if it gets that far. I personally believe this approach is designed to instill doubt, and that it shows a lack of personal courage and accountability for actions allegedly taken. Luckily, it looks like the judge isn’t buying the argument.

But instead of pursuing that thread further I want to explore the idea of what could happen today in this arena by answering this question:

How possible, and how likely, is it that tools are available in the public’s hands (or in a billionaire’s hands) that are good enough to create realistic deepfakes that can fool the general populace at a quick glance, let alone survive judicial and forensic scrutiny?

I’m not talking about a person who is really good with Photoshop on still images (Graphika demonstrates some methods for how these can be detected), or even Midjourney or DALL-E recently creating the somewhat photorealistic shots of Trump or the Pope that we all saw so much of. I’m talking about generating live-action video, whether in a studio or in front of a live audience, that is indistinguishable from what you’d see with your own eyes. SPOILER ALERT: If you watched Succession Season 4, Episode 6 last night, you’ll know exactly what I’m talking about. Right off the front pages...

To some degree, the ability for real humans to manipulate videos using cutting-edge tools has been around for several years now, and some of these artists are pretty good. Take, for example, Mashable’s story on 13 video deepfakes of TV and movie stars. And we’ve all seen the many Tom Cruise deepfakes on YouTube.

The next iteration is AI-based avatars where content is loaded into a virtual avatar (select one of 100+ models) and the output looks almost indistinguishable from a real human. Synthesia has mastered this capability and we’ve seen their AI avatar product used to create pretty realistic anti-American propaganda “news stories”.

Taking things to the next level, Chinese company Tencent has recently announced that they have created a Deepfake as a Service platform that allows users to create an AI-generated 3D photorealistic video of a real person for 1,000 yuan (around $145), using 3 minutes of the target person’s video and a 100-sentence vocabulary. Creating the video takes about 24 hours with English and Chinese as the target languages.

What makes the discussion even more interesting is that while Musk’s lawyers are very likely just throwing statements out there to see what sticks, they aren’t the only ones concerned. In March 2021, the FBI published a Traffic Light Protocol (TLP): White Private Industry Notification (PIN) warning about the use of synthetic content for cyber and foreign influence operations. In February 2022, the Department of Homeland Security also issued a TLP: White alert, CISA Insights: “Preparing for and Mitigating Foreign Influence Operations Targeting Critical Infrastructure,” stating:

Malicious actors use influence operations, including tactics like misinformation, disinformation, and malinformation (MDM), to shape public opinion, undermine trust, amplify division, and sow discord.

So, to answer the question I asked earlier, I believe the possibility and probability of tools being available and being actively exploited for malicious purposes is not only high but has been positively demonstrated. We’re living in tomorrow today. However, as much as this technology could be used for some very nefarious purposes, I’m not opposed to the technology itself and the capabilities it brings, just how it’s manipulated for misinformation or worse.

What I do believe should happen immediately, though, is the widespread adoption of blockchain-based digital identity for persons and blockchain-based watermarking for digital videos. Deepfakes are inevitable and will only grow in realism, but that does not mean we have to be unable or unprepared to separate them from content published by legitimate, original sources. This isn’t the answer to every problem related to deepfakes, of course, but attributing content to real authors and validating the authenticity of content is a start. Privacy problems related to anonymous whistleblower evidence and similar scenarios will still exist, and I think I’ll be taking that topic on in a near-future article.
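To make the idea of attributing content to an author a little more concrete, here is a minimal sketch in Python. It is not a real blockchain or a real watermarking scheme: the `Ledger` class, the `fingerprint` helper, and the author ID strings are all hypothetical names made up for illustration. It simply records a SHA-256 fingerprint of a video file in an append-only chain of hashed records, so that a later copy can be checked against what the original publisher registered.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def fingerprint(content: bytes) -> str:
    """Return the SHA-256 digest of the raw media bytes as hex."""
    return hashlib.sha256(content).hexdigest()


@dataclass
class Ledger:
    """Toy append-only hash chain; a stand-in for a real blockchain (assumption)."""
    blocks: list = field(default_factory=list)

    @staticmethod
    def _block_hash(body: dict) -> str:
        # Hash a canonical JSON encoding so the same record always hashes the same.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def register(self, author_id: str, content: bytes) -> dict:
        """Append a record linking an author ID to a content fingerprint."""
        prev = self.blocks[-1]["hash"] if self.blocks else GENESIS
        body = {"author": author_id, "fingerprint": fingerprint(content), "prev": prev}
        block = dict(body, hash=self._block_hash(body))
        self.blocks.append(block)
        return block

    def is_intact(self) -> bool:
        """Recompute every hash and link to detect edited or reordered records."""
        prev = GENESIS
        for block in self.blocks:
            body = {k: block[k] for k in ("author", "fingerprint", "prev")}
            if block["prev"] != prev or block["hash"] != self._block_hash(body):
                return False
            prev = block["hash"]
        return True

    def lookup_author(self, content: bytes):
        """Return the registered author for this exact content, or None."""
        if not self.is_intact():
            return None
        digest = fingerprint(content)
        for block in self.blocks:
            if block["fingerprint"] == digest:
                return block["author"]
        return None
```

A publisher would call `register` once when releasing a video, and anyone holding a copy could call `lookup_author` on its bytes. Two limits of this sketch are worth stating: a plain hash only matches a bit-identical file, so any re-encoding or trimming breaks the match, and nothing here proves who the author ID really belongs to. A practical system would likely need digital signatures tied to a verified identity on top of this.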

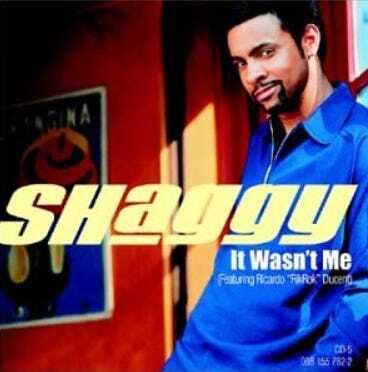

Until then, as Shaggy said, “…Seeing is believing so you better change your specs...”